Eyeon:Manual/Fusion 6/Renderer 3D

From VFXPedia

The Renderer 3D tool converts the 3D environment into a 2D image using either a default perspective camera or one of the cameras found in the scene. Every 3D scene in a composition should terminate with at least one Renderer 3D tool. The Renderer 3D tool can use either the Software or the OpenGL render engine to produce the resulting image. Additional render engines may also be available via third-party plugins.

The Software render engine uses only the system's CPU to produce the rendered images. It is usually much slower than the OpenGL render engine, but it produces consistent results on all machines, making it essential for renders that involve network rendering. The Software render engine is required to produce soft shadows, and generally supports all available illumination, texture, and material features.

The OpenGL render engine employs the GPU on the graphics card to accelerate the rendering of the 2D images. The output may vary slightly from system to system, depending on the exact graphics card installed. The graphics card driver can also affect the results from the OpenGL renderer. The OpenGL render engine's speed makes it possible to provide customized supersampling and realistic 3D depth of field options. Soft shadows cannot be rendered using the OpenGL renderer.

External Inputs

- Renderer3D.SceneInput

- [ orange, required ] This input expects a 3D scene.

- Renderer3D.EffectMask

- [ violet, optional ] This input uses a single or four channel 2D image to mask the output of the tool.

Controls

The Camera drop-down list is used to select which camera from the scene is used when rendering. The default option is Default, which will use the first camera found in the scene. If no camera is located, the default perspective view will be used instead.

The Eye control tells the tool how to render the image in stereoscopic projects. The Mono option will ignore the stereoscopic settings in the camera. The Left and Right options will translate the camera using the stereo separation and convergence options defined in the camera to produce either left or right eye outputs.

The first two checkboxes in this reveal determine whether the tool will print warnings and errors produced while rendering to the console. The second row of checkboxes tells the tool whether it should abort rendering when a warning or error is encountered. The default for this tool enables all four checkboxes.

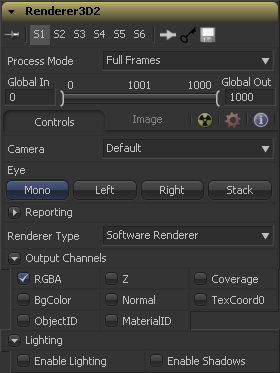

This drop-down menu lists the available render engines. Fusion provides two, the Software and OpenGL render engines (described above), and additional renderers can be added via third-party plugins.

All of the controls found below this drop-down menu are added by the render engine. They may change depending on the options available to each renderer. As a result, each renderer is described in its own section below.

Software Controls

In addition to the usual Red, Green, Blue and Alpha channels, the Software renderer can also embed the following channels into the image. Enabling additional channels will consume additional memory and processing time, so these should be used only when required.

- RGBA

- This option tells the renderer to produce the red, green, blue and alpha color channels of the image. These channels are required and they cannot be disabled.

- Z

- This option enables rendering of the Z-channel. The pixels in the Z-channel contain a value that represents the distance of each pixel from the camera. Note that the Z-channel values cannot include anti-aliasing. In pixels where multiple depths overlap, the frontmost depth value is used for that pixel.

- Coverage

- This option enables rendering of the coverage channel. The coverage channel contains information about which pixels in the Z-buffer provide coverage (are overlapping with other objects). This helps tools that use the Z-buffer to provide a small degree of anti-aliasing. The value of the pixels in this channel indicates, as a percentage, how much of the pixel is composed of the foreground object.

- BgColor

- This option enables rendering of the BgColor channel. This channel contains the color values from objects behind the pixels described in the coverage channel.

- Normal

- This option enables rendering of the X, Y and Z Normals channels. These three channels contain pixel values that indicate the orientation (direction) of each pixel in the 3D space. Each axis is represented by a color channel containing values in the range [-1,1].

- TexCoord

- This option enables rendering of the U and V mapping coordinate channels. The pixels in these channels contain the texture coordinates of the pixel. Although texture coordinates are processed internally within the 3D system as 3-component UVW, Fusion images only store the UV components. These components are mapped into the Red and Green color channels.

- ObjectID

- This option enables rendering of the ObjectID channel. Each object in the 3D environment can be assigned a numeric identifier when it is created. The pixels in this floating point image channel contain the values assigned to the objects that produced the pixel. Empty pixels have an ID of 0, and the channel supports values as high as 65534. Multiple objects can share a single Object ID. This buffer is useful for extracting mattes based on the shapes of objects in the scene.

- MaterialID

- This option enables rendering of the MaterialID channel. Each material in the 3D environment can be assigned a numeric identifier when it is created. The pixels in this floating point image channel contain the values assigned to the materials that produced the pixel. Empty pixels have an ID of 0, and the channel supports values as high as 65534. Multiple materials can share a single Material ID. This buffer is useful for extracting mattes based on a texture, for example a mask containing all of the pixels which comprise a brick texture.
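
As a rough illustration of how an ID channel can drive a matte, the sketch below works on a plain Python grid rather than on a Fusion image. The function name `matte_from_ids` and the sample data are hypothetical and are not part of Fusion's scripting interface; the same idea would apply to either the ObjectID or the MaterialID channel.

```python
def matte_from_ids(id_channel, wanted_ids):
    """Return a matte that is 1.0 where the pixel's ID is wanted, else 0.0."""
    wanted = set(wanted_ids)
    return [[1.0 if pixel_id in wanted else 0.0 for pixel_id in row]
            for row in id_channel]


# Hypothetical 4x3 ID channel: 0 marks empty pixels, 1 and 2 are two objects.
object_ids = [
    [0, 1, 1, 0],
    [0, 1, 2, 2],
    [0, 0, 2, 2],
]

# Isolate the object (or material) that was assigned ID 2.
matte = matte_from_ids(object_ids, [2])
```

Because several objects can share one ID, passing more than one value in `wanted_ids` is only needed when the objects of interest were given different IDs.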

Lighting

When the Enable Lighting checkbox is selected, objects will be lit by any lights in the scene. If no lights are present, all objects will be black.

When the Enable Shadows checkbox is selected, the renderer will produce shadows, at the cost of some speed.

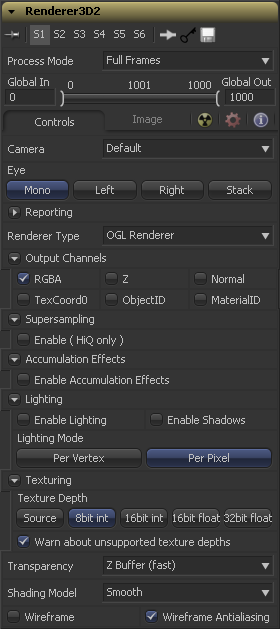

OpenGL Controls

In addition to the usual Red, Green, Blue and Alpha channels, the OpenGL render engine can also embed the following channels into the image. Enabling additional channels will consume additional memory and processing time, so these should be used only when required.

- RGBA

- This option tells the renderer to produce the red, green, blue and alpha color channels of the image. These channels are required and they cannot be disabled.

- Z

- This option enables rendering of the Z-channel. The pixels in the Z-channel contain a value that represents the distance of each pixel from the camera. Note that the Z-channel values cannot include anti-aliasing. In pixels where multiple depths overlap, the frontmost depth value is used for that pixel.

- Normal

- This option enables rendering of the X, Y and Z Normals channels. These three channels contain pixel values that indicate the orientation (direction) of each pixel in the 3D space. Each axis is represented by a color channel containing values in the range [-1,1].

- TexCoord

- This option enables rendering of the U and V mapping coordinate channels. The pixels in these channels contain the texture coordinates of the pixel. Although texture coordinates are processed internally within the 3D system as 3-component UVW, Fusion images only store the UV components. These components are mapped into the Red and Green color channels.

- ObjectID

- This option enables rendering of the ObjectID channel. Each object in the 3D environment can be assigned a numeric identifier when it is created. The pixels in this floating point image channel contain the values assigned to the objects that produced the pixel. Empty pixels have an ID of 0, and the channel supports values as high as 65534. Multiple objects can share a single Object ID. This buffer is useful for extracting mattes based on the shapes of objects in the scene.

- MaterialID

- This option enables rendering of the MaterialID channel. Each material in the 3D environment can be assigned a numeric identifier when it is created. The pixels in this floating point image channel contain the values assigned to the materials that produced the pixel. Empty pixels have an ID of 0, and the channel supports values as high as 65534. Multiple materials can share a single Material ID. This buffer is useful for extracting mattes based on a texture, for example a mask containing all of the pixels which comprise a brick texture.

Supersampling

Supersampling produces an output image with higher quality anti-aliasing by brute force rendering a much larger image, then rescaling it down to the target resolution. The exact same results can be achieved by rendering a larger image in the first place, then using a Resize tool to bring the image to the desired resolution. Using the supersampling built into the renderer offers two distinct advantages over this method:

- The rendering is not restricted by memory or image size limitations. For example, consider the steps to create a float16 1920 x 1080 image with 16x supersampling. Using the traditional Resize tool would require first rendering the image at a resolution of 30720 x 17280, then using a Resize to scale this image back down to 1920 x 1080. Simply producing the image would require nearly 4GB of memory. When supersampling is performed on the GPU, the OpenGL renderer can use tile rendering to significantly reduce memory usage.

- The OpenGL renderer can perform the rescaling of the image directly on the GPU much more quickly than the CPU can manage it. In general, the more GPU memory the graphics card has, the faster the operation will be.
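
The memory figure quoted in the first point can be checked with a little arithmetic. The sketch below assumes four channels (RGBA) at two bytes per channel for a float16 image and ignores any auxiliary channels or overhead; the function name `image_bytes` is hypothetical.

```python
def image_bytes(width, height, channels=4, bytes_per_channel=2):
    """Uncompressed size of an image; the defaults assume float16 RGBA."""
    return width * height * channels * bytes_per_channel


target_width, target_height = 1920, 1080
rate = 16  # 16x supersampling on each axis

# Resolution that would have to be rendered before a manual Resize.
big_width, big_height = target_width * rate, target_height * rate

big_bytes = image_bytes(big_width, big_height)
target_bytes = image_bytes(target_width, target_height)

print(big_width, big_height)            # 30720 17280
print(round(big_bytes / 2**30, 2))      # 3.96 (GiB), i.e. "nearly 4GB"
print(big_bytes // target_bytes)        # 256 times the size of the target image
```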

When working interactively, Fusion will skip the supersampling stage unless the HiQ button is selected in the Time Ruler. Final quality renders will always include supersampling if it is enabled.

This checkbox can be used to enable supersampling of the rendered image. The remaining controls in this reveal will only appear if this is selected.

When this checkbox is disabled, separate sliders are presented to control the amount of supersampling on the X and Y axes.

The supersampling rate tells the OpenGL renderer how large to scale the image. For example, if the supersampling rate is set to 4 and the OpenGL renderer is set to output a 1920 x 1080 image, internally a 7680 x 4320 image will be rendered and then scaled back down to produce the target image. Set the multiplier higher to get better edge anti-aliasing at the expense of render time. Typically 8x8 supersampling (64 samples per pixel) is sufficient to reduce most aliasing artifacts.
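
The relationship between the rate, the internal render size and the number of samples per output pixel can be written out as a small sketch. The function names are hypothetical, and the separate X and Y rates simply mirror the separate sliders described above.

```python
def internal_resolution(width, height, rate_x, rate_y=None):
    """Size of the image rendered internally before it is scaled back down."""
    if rate_y is None:
        rate_y = rate_x  # one rate applied to both axes
    return width * rate_x, height * rate_y


def samples_per_pixel(rate_x, rate_y=None):
    """Number of rendered samples that contribute to each output pixel."""
    if rate_y is None:
        rate_y = rate_x
    return rate_x * rate_y


print(internal_resolution(1920, 1080, 4))     # (7680, 4320)
print(samples_per_pixel(8))                   # 64
print(internal_resolution(1920, 1080, 4, 2))  # (7680, 2160) with separate rates
```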

When downsampling the supersized image, the pixels surrounding a given pixel are often used to give a more realistic result. There are various filters available for combining these pixels. More complex filters can give better results, but are usually slower to calculate. The best filter for the job will often depend on the amount of scaling and on the contents of the image itself.

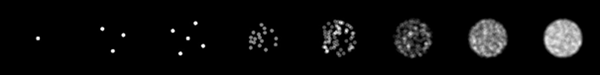

The functions of these filters are shown in the image above. From left to right these are:

- Box - This is a simple interpolation scale of the image.

- Bi-Linear (triangle) - This uses a simplistic filter, which produces relatively clean and fast results.

- Bi-Cubic (quadratic) - This filter produces a nominal result. It offers a good compromise between speed and quality.

- Bi-Spline (cubic) - This produces better results with continuous tone images but is slower than Quadratic. If the images have fine detail in them, the results may be blurrier than desired.

- Catmull-Rom - This produces good results with continuous tone images which are scaled down, producing sharp results with finely detailed images.

- Gaussian - This is very similar in speed and quality to Quadratic.

- Mitchell - This is similar to Catmull-Rom but produces better results with finely detailed images. It is slower than Catmull-Rom.

- Lanczos - This is very similar to Mitchell and Catmull-Rom but is a little cleaner and also slower.

- Sinc - This is an advanced filter that produces very sharp, detailed results. However, it may produce visible "ringing" in some situations.

- Bessel - This is similar to the Sinc filter but may be slightly faster.

The Window Method menu appears only when the Reconstruction Filter is set to "Sinc" or "Bessel".

- Hanning - This is a simple tapered window.

- Hamming - Hamming is a slightly tweaked version of Hanning.

- Blackman - A window with a more sharply tapered falloff.
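
For reference, the three windows can be sketched using their common textbook definitions. Whether Fusion uses exactly these constants is not stated here, so treat the formulas and the helper names (`windowed_sinc` in particular) as assumptions. Each window takes a position `t` in the range [-1, 1], where 0 is the center of the kernel.

```python
import math


def hanning(t):
    """Raised cosine that reaches zero at the edges of the window."""
    return 0.5 + 0.5 * math.cos(math.pi * t)


def hamming(t):
    """Like Hanning, but the edges stop at 0.08 instead of zero."""
    return 0.54 + 0.46 * math.cos(math.pi * t)


def blackman(t):
    """Two cosine terms give a more sharply tapered falloff."""
    return 0.42 + 0.5 * math.cos(math.pi * t) + 0.08 * math.cos(2 * math.pi * t)


def sinc(x):
    """Normalized sinc, with the removable singularity at zero filled in."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def windowed_sinc(x, radius, window):
    """Sinc weight at distance x, tapered to the given radius by a window."""
    if abs(x) >= radius:
        return 0.0
    return sinc(x) * window(x / radius)


# Halfway to the edge, Blackman has already fallen further than Hanning.
print(round(hanning(0.5), 2), round(blackman(0.5), 2))  # 0.5 0.34
```

The window limits how far the Sinc (or Bessel) function extends, which is why the menu only appears for those two filters.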

When this checkbox is disabled, it is possible to set different values for the X and Y axes when setting the filter width.

This slider can be used to adjust the size of the filter kernel. The kernel describes how many of the pixels surrounding the current pixel are sampled to produce the scaled result. Decreasing the width of the filter causes the anti-aliasing in the resulting image to appear sharper, while increasing it makes it seem blurrier.

Each type of filter has a default width setting. For example, a Box filter by default uses a 1x1 kernel, a triangle (Bi-Linear) filter is 2x2, a Bi-Cubic filter is 3x3, Bi-Spline and Catmull-Rom use 4x4, while a Gaussian filter is 3.34x3.34. The kernel width of the filter is essentially multiplied by the value set in this control.
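
The default widths listed above and the effect of the multiplier can be tabulated in a short sketch. The dictionary and function names are hypothetical, and the idea that the work per output pixel grows roughly with the kernel area is an assumption used only to show why large multipliers become expensive.

```python
# Default kernel widths per axis, taken from the description above.
DEFAULT_KERNEL_WIDTH = {
    "Box": 1.0,
    "Bi-Linear": 2.0,
    "Bi-Cubic": 3.0,
    "Bi-Spline": 4.0,
    "Catmull-Rom": 4.0,
    "Gaussian": 3.34,
}


def effective_kernel_width(filter_name, width_multiplier):
    """Kernel width per axis after applying the filter width control."""
    return DEFAULT_KERNEL_WIDTH[filter_name] * width_multiplier


def kernel_area(filter_name, width_multiplier):
    """Approximate pixel area sampled per output pixel.

    Assumption: cost scales with this area, so doubling the multiplier
    roughly quadruples the work.
    """
    width = effective_kernel_width(filter_name, width_multiplier)
    return width * width


print(effective_kernel_width("Bi-Cubic", 2.0))  # 6.0
print(kernel_area("Bi-Cubic", 1.0))             # 9.0
print(kernel_area("Bi-Cubic", 2.0))             # 36.0
```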

This can be used as a mechanism to blur the resulting image, but it is not recommended, as it becomes increasingly inefficient for larger multipliers and consumes significantly more resources. Larger values will often cause a graphics card to fail entirely. For values higher than 4, using a Blur tool on the output image will almost certainly be more efficient.

Accumulation Effects

Accumulation Effects are created by rendering multiple "sub-frames" to produce the final image. This technique allows depth of field rendering on the graphics hardware. Future versions of Fusion may also allow additional effects based on the accumulation technique.

This checkbox enables calculation of the Accumulation effects. While the OpenGL renderer currently provides only a Depth of Field accumulation, additional modes are planned for the future, so a global switch has been provided.

This checkbox enables Depth of Field rendering. Depth of Field is created by rendering multiple sub-frames, then combining them into a single frame. In each sub-frame the camera is rotated around a virtual target point. The distance to the target point is set by the Plane of Focus control in the Camera 3D tool. Pixels at the Plane of Focus will appear to be in focus.

If supersampling is enabled then each sub-frame will be produced using the supersampled size.

For reasons of efficiency the sub-frames created for the DOF effect are also used to produce motion blur when this option is enabled in the common controls tab (marked with the nuclear symbol). Effectively both features "share" sub-frames.

This control sets the number of sub-frames used in the DoF calculation. As with motion blur, the value of the control is doubled internally, so setting this control to 2 actually produces 5 sub-frames (4 + 1 original). If both motion blur and DoF are enabled, the number of sub-frames produced is determined by the higher quality value. This prevents the number of sub-frames produced from growing exponentially.
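
The sub-frame count described above can be expressed as a small sketch. It assumes the "doubled plus the original" rule holds for every quality value, which the text only confirms for a value of 2; the function names are hypothetical.

```python
def subframes_for_quality(quality):
    """Quality is doubled internally, plus the original frame."""
    return 2 * quality + 1


def shared_subframes(dof_quality, motion_blur_quality):
    """DoF and motion blur share sub-frames; the higher quality value wins."""
    return subframes_for_quality(max(dof_quality, motion_blur_quality))


print(subframes_for_quality(2))  # 5
print(shared_subframes(2, 4))    # 9

# If the two effects did not share sub-frames, the counts would multiply.
print(subframes_for_quality(2) * subframes_for_quality(4))  # 45
```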

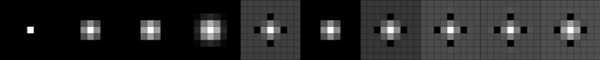

The following images show the effect of DoF Quality on a small circle:

The values of the control are, from left to right: 0, 1, 2, 8, 32, 128, 1024, 4096.

Defines how much the camera is moved per subframe from its original position. A higher value will result in a blurrier image or stronger DoF effect.

Lighting

When the Enable Lighting checkbox is selected, objects will be lit by any lights in the scene. If no lights are present, all objects will be black.

When the Enable Shadows checkbox is selected, the renderer will produce shadows, at the cost of some speed.

The per-vertex lighting model calculates lighting at each vertex of the scene's geometry. This produces a fast approximation of the scene's lighting, but tends to produce blocky lighting on poorly tessellated objects. The per-pixel method calculates lighting for each pixel instead, so it does not rely on the amount of detail in the scene's geometry and generally produces superior results.
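
The difference between the two models can be illustrated with a deliberately simplified, one-dimensional sketch. It uses a basic Lambert diffuse term on a flat surface with only two vertices; this is a generic illustration and not Fusion's actual shading code, and all names are hypothetical.

```python
import math


def lambert(point_x, light):
    """Diffuse term at a point on the surface y = 0, whose normal is (0, 1)."""
    light_x, light_y = light
    dx, dy = light_x - point_x, light_y
    length = math.hypot(dx, dy)
    return max(0.0, dy / length)  # dot product of normal and light direction


def per_vertex(x, x0, x1, light):
    """Light only the two vertices, then interpolate between them."""
    t = (x - x0) / (x1 - x0)
    return (1 - t) * lambert(x0, light) + t * lambert(x1, light)


def per_pixel(x, light):
    """Evaluate the lighting at the shaded point itself."""
    return lambert(x, light)


light = (0.0, 0.5)  # a light hovering just above the middle of the surface

# The surface spans x = -1 to x = 1 and has no vertex at the center.
center_vertex_lit = per_vertex(0.0, -1.0, 1.0, light)
center_pixel_lit = per_pixel(0.0, light)

print(round(center_vertex_lit, 3))  # 0.447, the hot spot is missed
print(round(center_pixel_lit, 3))   # 1.0
```

Adding more vertices between the two ends would bring the per-vertex result closer to the per-pixel one, which is why the problem shows up mainly on poorly tessellated objects.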

While using per-pixel lighting in the OpenGL renderer produces results closer to those produced by the more accurate Software renderer, it still has some disadvantages. Even with per-pixel lighting, the OpenGL renderer is less capable of dealing correctly with semi-transparency, soft shadows and colored shadows. The color depth of the rendering will be limited by the capabilities of the graphics card in the system.

The OpenGL renderer reveals this control for selecting which ordering method to use when calculating transparency.

- Z Buffer (fast)

- This mode is extremely fast, and is adequate for scenes containing only opaque objects. The speed of this mode comes at the cost of accurate sorting - only the objects closest to the camera are certain to be in the correct sort order. As a result semi-transparent objects may not be shown correctly, depending on their ordering within the scene.

- Sorted (accurate)

- This mode will sort all objects in the scene (at the expense of speed) before rendering, giving correct transparency.

- Quick Mode

- This experimental mode is best suited to scenes that almost exclusively contain particles.
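
To see why the drawing order matters for semi-transparent objects, consider the simplified sketch below. It blends single-value "colors" with a standard over operation and is only meant to show the effect of ordering; it does not describe how either mode is implemented inside the OpenGL renderer, and the layer data is hypothetical.

```python
def over(behind, color, alpha):
    """Blend a semi-transparent color over whatever is already behind it."""
    return color * alpha + behind * (1.0 - alpha)


def composite(layers, background=0.0):
    """Blend the layers in the order given, first to last."""
    result = background
    for layer in layers:
        result = over(result, layer["color"], layer["alpha"])
    return result


def sort_back_to_front(layers):
    """Farthest layer first, so nearer layers are blended on top of it."""
    return sorted(layers, key=lambda layer: layer["depth"], reverse=True)


# Two hypothetical 50% transparent objects, listed in scene order.
layers = [
    {"name": "near white glass", "depth": 1.0, "color": 1.0, "alpha": 0.5},
    {"name": "far black glass", "depth": 5.0, "color": 0.0, "alpha": 0.5},
]

unsorted_result = composite(layers)
sorted_result = composite(sort_back_to_front(layers))

print(unsorted_result)  # 0.25, the far object wrongly darkens the near one
print(sorted_result)    # 0.5
```

With fully opaque objects (alpha of 1.0) a depth test alone is enough to get the right answer, which is why the faster mode is adequate for scenes without transparency.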

Use this menu to select a Shading Model to use for materials in the scene. Smooth is the shading model employed in the views and Flat produces a simpler and faster shading model.

Renders the whole scene as wireframe. This will show the edges and polygons of the objects. The edges are still shaded by the material of the objects.

Enables anti-aliasing for the wireframe render.

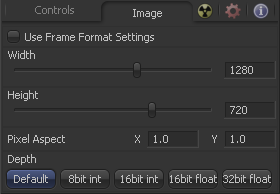

Image Tab

The controls in this tab are used to set the resolution, color depth and pixel aspect of the image produced by the tool.

Use this menu control to select the fields processing mode used by Fusion to render changes to the image. The default option is determined by the Has Fields checkbox control in the Frame Format Preferences. For more information on fields processing, consult the Frame Formats chapter.

Use this control to specify the position of this tool within the project. Use Global In to specify the frame on which the clip starts and Global Out to specify the frame on which the clip ends (inclusive) within the project's Global Range.

The tool will not produce an image on frames outside of this range.

When this checkbox is selected, the width, height and pixel aspect of the image created by the tool will be locked to values defined in the composition's Frame Format preferences. If the Frame Format preferences change, the resolution of the image produced by the tool will change to match. Disabling this option can be useful to build a composition at a different resolution than the eventual target resolution for the final render.

This pair of controls is used to set the Width and Height dimensions of the image to be created by the tool.

This control is used to specify the Pixel Aspect ratio of the created images. An aspect ratio of 1:1 would generate a square pixel with the same dimensions on either side (like a computer display monitor), and an aspect of 0.9:1 would create a slightly rectangular pixel (like an NTSC monitor).
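
As a small worked example of what the pixel aspect means in practice, the sketch below computes how large an image appears when shown on a square-pixel display. The function name `display_size` is hypothetical, and the 720 x 486 resolution is just a common NTSC frame size used for illustration.

```python
def display_size(width, height, aspect_x, aspect_y=1.0):
    """Size of the image on a square-pixel display, rounded to whole pixels."""
    return round(width * aspect_x / aspect_y), height


print(display_size(720, 486, 1.0))  # (720, 486) with square pixels
print(display_size(720, 486, 0.9))  # (648, 486) with 0.9:1 pixels
```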

The Depth button array is used to set the pixel color depth of the image created by the tool. 32-bit pixels require 4 times the memory of 8-bit pixels, but have far greater color accuracy. Float pixels allow high dynamic range values outside the normal 0..1 range, for representing colors that are brighter than white or darker than black. See the Frame Formats chapter for more details.

| The contents of this page are copyright by eyeon Software. |