
The next step in this workflow involves the controls found in the Solve tab. Solving is a compute-intensive process in which the Camera Tracker analyzes the existing tracks to create a 3D scene. It generates a virtual camera that matches the live action and a point cloud consisting of 3D locators that recreate the tracked features in 3D space. The analysis is based on parallax in the frame, which is the perception that features closer to the camera move faster than features farther away. This is much like looking out the side window of a car and seeing objects in the distance move more slowly than items near the roadside.
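As a rough illustration of the parallax described above, the following Python sketch assumes an idealized pinhole camera that slides sideways past static points. The function name and all numbers are hypothetical and are not part of the Camera Tracker; the sketch only shows that the on-screen shift shrinks as depth grows.

```python
def image_shift_px(focal_px: float, camera_move: float, depth: float) -> float:
    """Horizontal shift in pixels of a static point when a pinhole camera
    translates sideways by camera_move (same units as depth)."""
    return focal_px * camera_move / depth


# Hypothetical values: focal length of 1500 px, camera moves 0.5 m sideways.
near = image_shift_px(focal_px=1500.0, camera_move=0.5, depth=5.0)
far = image_shift_px(focal_px=1500.0, camera_move=0.5, depth=100.0)

print(near, far)  # the near point shifts far more than the distant one
```

The difference between those two shifts is the depth cue the solver relies on. Tracks that all sit at a similar distance carry very little of it.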

The trackers found in the Track phase of this workflow have a great deal to do with the success or failure of the solver, making it critical to deliver the best set of tracking points from the very start. Although the masks you create to exclude objects from tracking help to omit problematic tracking points, you almost always need to further filter and delete poor-quality tracks in the Solver tab. That’s why, from a user’s point of view, solving should be thought of as an iterative process:

1 Click the Solve button to run the solver.

2 Filter out and delete poor tracks.

3 Rerun the solver.

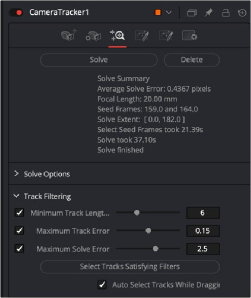

The Solver tab after it has run and produced an average solve error of 0.4367 pixels

How Do You Know When to Stop?

At the end of the solve process, an Average Solve Error (sometimes called a reprojection error) appears at the top of the Inspector. This is the crucial value that tells you how well the calculation has gone. A good Average Solve Error for HD content is below 1.0.

You can interpret a value of 1.0 as a pixel offset; at any given time, the track could be offset by 1 pixel. The higher the resolution, the lower the solve error should be. If you are working with 4K material, your goal should be to achieve a solve error below 0.5.
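To make the idea of a reprojection error concrete, here is a minimal Python sketch. It assumes a single frame, a distortion-free pinhole camera at the origin looking down +Z, and a plain mean over all tracks. How the Camera Tracker actually aggregates its error is not stated here, so the function names, the averaging, and the sample data are illustrative assumptions only.

```python
import math

# Targets quoted in the text above: below 1.0 for HD, below 0.5 for 4K.
TARGET_ERROR = {"HD": 1.0, "4K": 0.5}


def project(point, focal_px, cx, cy):
    """Project a 3D point (x, y, z) to pixel coordinates with a pinhole model."""
    x, y, z = point
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def average_solve_error(points_3d, tracks_2d, focal_px, cx, cy):
    """Mean pixel distance between reprojected 3D points and tracked 2D positions."""
    errors = []
    for point, (u, v) in zip(points_3d, tracks_2d):
        pu, pv = project(point, focal_px, cx, cy)
        errors.append(math.hypot(pu - u, pv - v))
    return sum(errors) / len(errors)


# Hypothetical solved locators and the 2D tracks they should land on.
points_3d = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0)]
tracks_2d = [(960.3, 540.4), (1060.0, 540.0)]

error = average_solve_error(points_3d, tracks_2d, focal_px=1000.0, cx=960.0, cy=540.0)
print(error, error < TARGET_ERROR["HD"])
```

In this toy data one track is about half a pixel away from its reprojected locator and the other matches exactly, so the average lands well under the HD target.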

Tips for Solving Camera Motion

When solving camera movement, it’s important to provide accurate live-action camera information, such as focal length and film gate size, which can significantly improve the accuracy of the camera solve. For example, if the provided focal length is too far away from the correct physical value, the solver can fail to converge, resulting in a useless solution.
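Focal length and film gate size matter because together they fix the camera's angle of view. The Python sketch below uses the standard pinhole relationship between the two; the function name and the sample values are hypothetical and not taken from the Camera Tracker.

```python
import math


def horizontal_fov_deg(focal_length_mm: float, gate_width_mm: float) -> float:
    """Horizontal angle of view for an ideal pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(gate_width_mm / (2.0 * focal_length_mm)))


# Hypothetical example: the same 36 mm wide gate with two different focal lengths.
print(horizontal_fov_deg(24.0, 36.0))
print(horizontal_fov_deg(50.0, 36.0))
```

Entering 50 mm for a shot filmed at 24 mm would describe a much narrower view than the one actually recorded, which is the kind of mismatch that can keep a solve from converging.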

Additionally, for the solver to accurately triangulate and reconstruct the camera and point cloud, it is important to have:

— A good balance of tracks across objects at different depths, with not too many tracks in the distant background or sky (these do not provide any additional perspective information to the solver).

— Tracks distributed evenly over the image and not highly clustered on a few objects or one side of the image.

— Track start and end frames staggered over time, with not too many tracks ending on the same frame.
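Two of the conditions above, even distribution and staggered track endings, can be checked with simple counts. The following Python sketch is one possible way to do that on exported track data. The grid size, function names, and sample values are all assumptions and do not correspond to any Camera Tracker feature.

```python
from collections import Counter


def grid_coverage(positions, width, height, cols=4, rows=3):
    """Fraction of grid cells that contain at least one track position.

    Positions are assumed to be pixel coordinates inside the frame.
    """
    occupied = set()
    for x, y in positions:
        col = min(int(x / width * cols), cols - 1)
        row = min(int(y / height * rows), rows - 1)
        occupied.add((col, row))
    return len(occupied) / (cols * rows)


def busiest_end_frame(end_frames):
    """Return the frame where most tracks end and the share of tracks ending there."""
    frame, count = Counter(end_frames).most_common(1)[0]
    return frame, count / len(end_frames)


# Hypothetical track positions in a 1920x1080 frame and their end frames.
positions = [(100, 100), (1800, 1000), (960, 540)]
end_frames = [40, 40, 40, 75, 90]

coverage = grid_coverage(positions, width=1920, height=1080)
frame, share = busiest_end_frame(end_frames)
print(coverage, frame, share)
```

Low coverage suggests the tracks are clustered in one part of the image, and a large share on a single end frame suggests many tracks are dropping out together.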
