
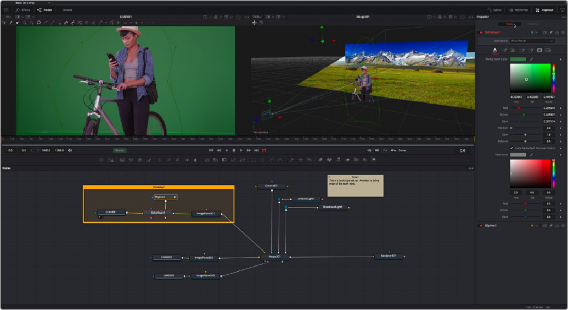

An Overview of 3D Compositing

Traditional image-based compositing is a two-dimensional process. Image layers have only enough depth to define one as the foreground and another as the background. This is at odds with the realities of production, where images are either captured by a live-action camera that can move freely in all three dimensions through a shot with real depth, or created in a true 3D modeling and rendering application.
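To make the depth argument concrete, here is a minimal Python sketch of parallax under an idealized pinhole camera. It does not model Fusion's own camera or use any Fusion API. When the camera moves sideways, a point close to the lens shifts much farther across the frame than a distant one, and a flat foreground/background layer stack cannot reproduce that on its own. The function names, the 35 mm focal length, and the scene distances are illustrative assumptions.

```python
def project_x(point_x, depth, camera_x, focal_length=35.0):
    """Project a point's horizontal position onto the sensor (pinhole model).

    Assumes the camera looks straight down +Z and depth > 0. Positions and
    depth share one unit (meters here); the result is in the focal length's
    unit (millimeters on the sensor).
    """
    return focal_length * (point_x - camera_x) / depth


def parallax_shift(point_x, depth, camera_move, focal_length=35.0):
    """Sensor-space shift of a point when the camera translates sideways."""
    before = project_x(point_x, depth, 0.0, focal_length)
    after = project_x(point_x, depth, camera_move, focal_length)
    return after - before


# Hypothetical scene: a foreground point 2 m away and a background point
# 20 m away, both on the optical axis, while the camera dollies 0.5 m right.
fg = parallax_shift(0.0, 2.0, 0.5)
bg = parallax_shift(0.0, 20.0, 0.5)
print(fg, bg)  # -8.75 -0.875
```

The foreground point moves ten times as far as the background point. Placing the same elements at their true depths in a 3D scene produces this difference automatically, whereas a 2D composite would need each layer animated by hand to match.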

Within the Fusion Node Editor, you have a GPU-accelerated 3D compositing environment that supports imported geometry, point clouds, and particle systems, for tasks such as:

— Converting 2D images into image planes in 3D space

— Creating rough primitive geometry

— Importing mesh geometry from FBX or Alembic scenes

— Creating realistic surfaces using illumination models and shader compositing

— Rendering with realistic depth of field, motion blur, and supersampling

— Creating and using 3D particle systems

— Creating, extruding, and beveling 3D text

— Lighting and casting shadows across geometry

— 3D camera tracking

— Importing cameras, lights, and materials from 3D applications such as Maya, 3ds Max, or LightWave

— Importing matched cameras and point clouds from applications such as SynthEyes or PFTrack

An example 3D scene in Fusion